AI regulation: What businesses need to know in 2023

The idea of artificial intelligence, or synthetic minds able to think and reason in ways that approximate human capabilities, has been a theme for centuries. Many ancient cultures expressed such ideas, and some even pursued them as goals. In the early 20th century, science fiction began to crystallize the notion for large modern audiences. Stories such as The Wizard of Oz and films like Metropolis struck a chord with audiences around the world.

In 1956, John McCarthy and Marvin Minsky hosted the Dartmouth Summer Research Project on Artificial Intelligence, during which the term artificial intelligence was coined and introduced, and the race for practical ways of realizing the old dream began in earnest. The next five decades would see AI developments and enthusiasm wax and wane, but as the computational power driving the Digital Age grew exponentially while computation costs dropped precipitously, AI moved definitively from the realm of science-fiction speculation to technological reality. In the early 2000s, investors poured massive amounts of funding into accelerating and deepening the capacities of AI systems.

Recent tech breakthroughs underscore importance of AI regulation

In 2023, generative artificial intelligence systems (tools capable of producing new and original content such as images, text or audio) are ubiquitous in public discourse. Companies are scrambling to understand, embrace and effectively implement ChatGPT, GPT-4 and other large language models and algorithms. The potential benefits for companies of all sizes have come into sharp focus: greater efficiencies in processes, fewer human errors, reduced costs through automation and discovery of unknown and unanticipated insights in the massive and growing heaps of proprietary and public domain data.

Unsurprisingly, governments are laser-focused on regulating AI. Perennial concerns such as consumer protection, civil liberties and fair business practices partly explain the interest of governments around the world in AI.

But at least as important as safeguarding citizens against the downsides of AI is the competition among governments for supremacy in AI. Attracting brains and businesses necessarily means creating predictable and navigable regulatory environments in which AI enterprises can thrive.

In effect, governments are confronting an AI regulatory Scylla and Charybdis: on the one hand, seeking to protect citizens from the very real downsides of AI implementation at scale, and on the other, needing to engineer governance regimes for a complex and rapidly changing AI shock wave that is permeating society.

Worldwide race to regulate AI systems

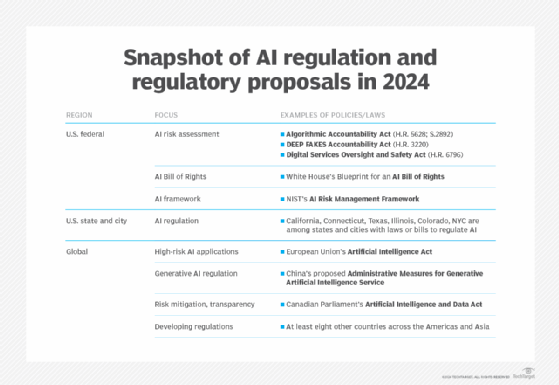

Amid the rapid evolution and adoption of AI tools, AI regulations and regulatory proposals are spreading almost as rapidly as AI applications.

U.S.

In the U.S., government at virtually every level is actively working to implement new regulatory protections and related frameworks and policies designed to simultaneously cultivate AI development and curb AI-enabled societal harms.

Federal regulation. AI risk assessment is presently a top priority for the federal government. U.S. lawmakers are particularly sensitive to the difficulties of understanding how algorithms are created and how they arrive at certain outcomes. So-called black box systems make it difficult to mitigate risk, map processes or document their effects on citizens.

To address these issues, the Algorithmic Accountability Act (H.R. 6580; S. 3572) is presently being debated in Congress. If it becomes law, it would require entities that use generative AI systems in critical decisions pertaining to housing, healthcare, education, employment, family planning and other personal areas of life to assess impacts on citizens before and after the algorithms are used.

Similarly, the DEEP FAKES Accountability Act (H.R. 2395) and the Digital Services Oversight and Safety Act (H.R. 6796), should they make it through Congress and become enforceable laws, would require entities to be transparent about the creation and public release of “false personation[s]” and mis/disinformation created by generative AI, respectively.

And although it is not a law, the White House’s Blueprint for an AI Bill of Rights is a formidable source of federal governance in the AI space. The Blueprint comprises a set of five principles — safe and effective systems; algorithmic discrimination protections; data privacy; notice and explanation; and human alternatives, considerations and fallback — that are intended to be voluntarily adopted by businesses and integrated into the very design of their AI systems.

Together with the AI Risk Management Framework promulgated by the National Institute of Standards and Technology, the Blueprint gives the U.S. federal government a powerful set of policies designed to protect Americans. This guidance comes from the largest employer in the U.S. It shapes the actions of most federal government units and the bulk of their employees, while simultaneously exerting pressure on private entities to come into compliance with the government’s AI policy agenda. For these reasons, these “voluntary” proposals and principles should not be underestimated.

U.S. state and city regulation. States, too, are joining the race to regulate AI. California, Connecticut, Texas and Illinois are each attempting to strike the same balance as the federal government: encouraging innovation, while protecting constituents from AI downsides. Arguably, Colorado has gone furthest with its Algorithm and Predictive Model Governance Regulation, which would impose nontrivial requirements on the use of AI algorithms by Colorado-licensed life insurance companies.

Similarly, municipalities are moving ahead with their own AI ordinances. New York City is leading the way with Local Law 144, which focuses on automated employment decision tools, that is, AI used in the context of human resource activities. Other U.S. cities are bound to follow the Empire City’s lead in short order.

Global

In Europe, the dominant source of AI governance is the European Union’s Artificial Intelligence Act. Like the U.S. Blueprint for an AI Bill of Rights, the EU AI Act aims primarily to require developers who create or work with high-risk AI applications to test their systems, document their use and mitigate risks by taking appropriate safety measures. The EU AI Act is expected to pass before the end of 2023 and to apply to all 27 EU member states. Importantly, the EU AI Act will likely generate a “Brussels Effect,” or significant regulatory reverberations well beyond the bounds of Europe.

China’s Cyberspace Administration is presently seeking public comment on proposed Administrative Measures for Generative Artificial Intelligence Service that would regulate a range of services provided to residents of mainland China.

The Canadian Parliament is currently debating the Artificial Intelligence and Data Act, a legislative proposal designed to harmonize AI laws across its provinces and territories, with particular focuses on risk mitigation and transparency.

Across the Americas and Asia, at least eight other countries are at various stages of developing their own regulatory approaches to governing AI.

Potential impact of AI regulation on companies in 2023

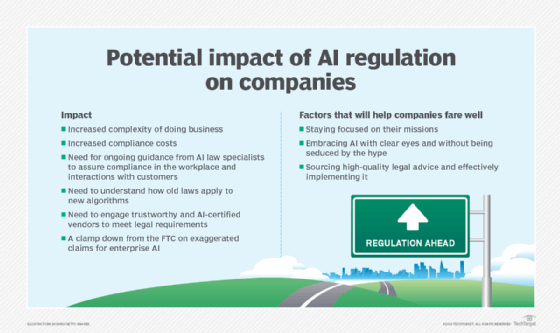

For companies located in, doing business in or searching for talent in one or more of the above-mentioned jurisdictions, the significance of this regulatory ferment is twofold.

First, companies should anticipate a continued and perhaps accelerated rate of arrival of new regulatory proposals and enforced laws across all major jurisdictions, and across all levels of government, over the next 12 to 18 months.

This crescendo of regulatory activity will very likely lead to at least two predictable outcomes: increased complexity of doing business, and increased compliance costs. A veritable thicket of AI regulation will require new expertise and likely regular updates from AI law specialists in order to assure workplace compliance as well as compliance in engagements with clients and customers.

A particularly thorny issue will likely be the potential interactions between AI policies and preexisting regulations. For example, in the U.S. context, the Federal Trade Commission has clearly signaled its intention to clamp down on exaggerated claims for enterprise AI. Companies will need to be especially aware of the ways that old laws apply to new algorithms, rather than simply focusing on the new laws that contain “AI” in their titles.

Businesses will also need to be extremely careful when engaging with the newly minted vendors emerging to serve the soon-to-be-common legal requirements for various types of AI use certification. Finding trustworthy vendors, or in all likelihood certified certifiers, will be essential not only to complying with the new laws, but also to signaling to consumers that a company’s AI products are as safe as possible and to satisfying business insurers that the company is effectively managing AI risks.

The immediate future of AI regulation will almost certainly be a bumpy road. Companies that stay focused on their missions, embrace AI with clear eyes and without being seduced by the hype, and source high-quality legal advice and implement it effectively will fare well.