A guide to artificial intelligence in the enterprise

The application of artificial intelligence in the enterprise is profoundly changing the way businesses work. Companies are incorporating AI technologies into their business operations with the aim of saving money, boosting efficiency, generating insights and creating new markets.

There are AI-powered enterprise applications to enhance customer service, maximize sales, sharpen cybersecurity, optimize supply chains, free up workers from mundane tasks, improve existing products and point the way to new products. It is hard to think of an area in the enterprise where AI (the simulation of human processes by machines, especially computer systems) will not have an impact. Enterprise leaders determined to use AI to improve their businesses and ensure a return on their investment, however, face big challenges on several fronts:

- The domain of artificial intelligence is changing rapidly because of the tremendous amount of AI research being done. The world’s biggest companies, research institutions and governments around the globe are supporting major research initiatives on AI.

- There is a multitude of AI use cases: AI can be applied to any problem facing a company or to humankind writ large. During the COVID-19 pandemic, AI has played an important role in the global effort to contain the spread of the virus, detect hotspots, improve patient care, identify therapies and develop vaccines. As businesses emerge from the pandemic, investment in AI-enabled hardware and software robotics is expected to surge as companies strive to build resilience against future catastrophes.

- To reap the value of AI in the enterprise, business leaders must understand how AI works, where AI technologies can be aptly used in their businesses and where they cannot — a daunting proposition because of AI’s rapid evolution and multitude of use cases.

This wide-ranging guide to artificial intelligence in the enterprise provides the building blocks for becoming successful business consumers of AI technologies. It starts with introductory explanations of AI’s history, how AI works and the main types of AI. The importance and impact of AI are covered next, followed by information on the following critical areas of interest to enterprise AI users:

- AI’s key benefits and risks;

- current and potential AI use cases;

- building a successful AI strategy;

- necessary steps for implementing AI tools in the enterprise; and

- technological breakthroughs that are driving the field forward.

Throughout the guide, we include hyperlinks to TechTarget articles that provide more detail and insights on the topics discussed.

What are the origins of artificial intelligence?

The modern field of AI is often dated to 1956, when the term artificial intelligence was coined in the proposal for an academic conference held at Dartmouth College that year. But the idea that the human brain can be mechanized is deeply rooted in civilization.

Myths and legends, for example, are replete with statues that come to life. Many ancient cultures built human-like automata that were believed to possess reason and emotion. By the first millennium B.C., philosophers in various parts of the world were developing methods for formal reasoning, an effort that theologians, mathematicians, engineers, economists, psychologists, computational scientists and neurobiologists built upon over the next 2,000-plus years.

Below are some milestones in the long and still elusive quest to recreate the human brain. A TechTarget graphic depicts the pioneers of modern AI, from British mathematician and World War II codebreaker Alan Turing to the inventors of the new transformer neural networks that promise to revolutionize natural language processing:

- Early notables who strove to describe human thought as symbols — the foundation for AI concepts such as general knowledge representation — include the Greek philosopher Aristotle, the Persian mathematician Muḥammad ibn Mūsā al-Khwārizmī, 13th-century Spanish theologian Ramon Llull, 17th-century French philosopher and mathematician René Descartes, and the 18th-century clergyman and mathematician Thomas Bayes.

- The rise of the modern computer is often traced to 1836, when Charles Babbage was designing the Analytical Engine, the first design for a programmable machine; Augusta Ada Byron, Countess of Lovelace, later wrote what is often described as the first program for it. A century later, in the 1940s, Princeton mathematician John von Neumann conceived the architecture for the stored-program computer: the idea that a computer’s program and the data it processes can be kept in the computer’s memory.

- The first mathematical model of a neural network, arguably the basis for today’s biggest advances in AI, was published in 1943 by the computational neuroscientists Warren McCulloch and Walter Pitts in their landmark paper, “A Logical Calculus of Ideas Immanent in Nervous Activity.”

- The famous Turing Test, which focused on the computer’s ability to fool interrogators into believing its responses to their questions were made by a human being, was developed by Alan Turing in 1950.

- The 1956 summer conference at Dartmouth, funded by the Rockefeller Foundation, included AI pioneers Marvin Minsky, Oliver Selfridge and John McCarthy, who is credited with coining the term artificial intelligence. Also in attendance were Allen Newell, a computer scientist, and Herbert A. Simon, an economist, political scientist and cognitive psychologist, who presented their groundbreaking Logic Theorist, a computer program capable of proving certain mathematical theorems and often referred to as the first AI program.

- In the wake of the Dartmouth conference, leaders in the field predicted that a thinking machine able to learn and understand as well as a human was around the corner, attracting major government and industry support. Nearly 20 years of well-funded basic research generated significant advances in AI. Examples include the General Problem Solver (GPS) algorithm published in the late 1950s, which laid the foundations for developing more sophisticated cognitive architectures; Lisp, a language for AI programming that is still used today; and ELIZA, an early natural language processing (NLP) program that laid the foundation for today’s chatbots.

- When the promise of developing AI systems equivalent to the human brain proved elusive, governments and corporations backed away from their support of AI research. This led to a fallow period lasting from 1974 to 1980 that is known as the first AI winter. In the 1980s, research on neural network training techniques such as backpropagation and industry adoption of Edward Feigenbaum’s expert systems sparked a new wave of AI enthusiasm, only to be followed by another collapse of funding and support.

- The second AI winter lasted until the mid-1990s when groundbreaking work on neural networks and the advent of big data propelled the current renaissance of AI.
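The 1943 McCulloch-Pitts model in the list above treated each neuron as a threshold unit: it fires (outputs 1) when enough of its binary excitatory inputs are active, and any active inhibitory input blocks it. The minimal Python sketch below illustrates that idea; the function names are our own, and the code is a modern paraphrase of the model rather than anything taken from the paper.

```python
def mcp_neuron(excitatory, inhibitory, threshold):
    """McCulloch-Pitts style threshold unit: returns 1 (fires) or 0.

    excitatory, inhibitory: sequences of binary (0/1) inputs.
    threshold: how many excitatory inputs must be active to fire.
    """
    # Any active inhibitory input vetoes firing outright.
    if any(inhibitory):
        return 0
    # Otherwise fire once enough excitatory inputs are active.
    return 1 if sum(excitatory) >= threshold else 0


# Hypothetical helpers: fixed thresholds turn the same unit into logic gates.
def logical_and(a, b):
    return mcp_neuron([a, b], [], threshold=2)


def logical_or(a, b):
    return mcp_neuron([a, b], [], threshold=1)


def logical_not(a):
    # A constantly active excitatory input that `a` can inhibit.
    return mcp_neuron([1], [a], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

Networks of such units can compute any finite logical expression, which is the sense in which McCulloch and Pitts linked neural activity to logic. What the 1943 model lacked was a way to learn its thresholds from data.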

What is AI? How does AI work?

Many of the tasks done in the enterprise are not automatic but require a certain amount of intelligence. What characterizes intelligence, especially in the context of work, is not simple to pin down. Broadly defined, intelligence is the capacity to acquire knowledge and apply it to achieve an outcome; the action taken is related to the particulars of the situation rather than done by rote.

Getting a machine to perform in this manner is what is generally meant by artificial intelligence. But there is no single or simple definition of AI, as noted in this definitive report published by the National Science and Technology Council (NSTC).

“Some define AI loosely as a computerized system that exhibits behavior that is commonly thought of as requiring intelligence. Others define AI as a system capable of rationally solving complex problems or taking appropriate actions to achieve its goals in whatever real world circumstances it encounters,” the NSTC report stated.

Moreover, what qualifies as an intelligent machine, the authors explained, is a moving target: A problem that is considered to require AI quickly becomes regarded as “routine data processing” once it is solved.

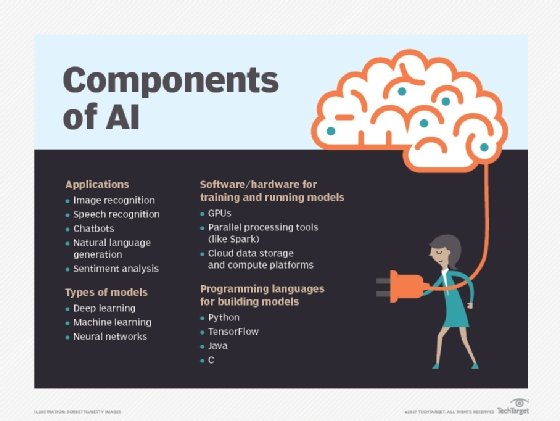

At a basic level, AI programming focuses on three cognitive skills — learning, reasoning and self-correction:

- The learning aspect of AI programming focuses on acquiring data and creating rules for how to turn data into actionable information. The rules, called algorithms, provide computing systems with step-by-step instructions on how to complete a specific task.

- The reasoning aspect involves AI’s ability to choose the most appropriate algorithm, among a set of algorithms, to use in a particular context.

- The self-correction aspect focuses on AI’s ability to progressively tune and improve a result until it achieves the desired goal.
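The three skills can be illustrated with a toy regression problem. In this minimal sketch (our own example, with hypothetical data), “learning” fits two candidate models to data, “reasoning” picks the candidate with the lowest error on held-out points, and “self-correction” is a gradient-descent loop that keeps adjusting a line’s slope and intercept until the error reaches a target. The names `fit_line` and `choose_model` and the `target_mse` threshold are assumptions for illustration; real systems use far larger models and data sets.

```python
# Hypothetical training data, roughly following y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 7.1, 8.9]


def mse(predict, xs, ys):
    """Mean squared error of a prediction function on (x, y) pairs."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_constant(xs, ys):
    """Learning, candidate A: always predict the average y seen in training."""
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y


def fit_line(xs, ys, lr=0.02, target_mse=0.05, max_steps=10_000):
    """Learning, candidate B, with self-correction: repeatedly nudge the slope w
    and intercept b against the error gradient until the error reaches target_mse."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(max_steps):
        if mse(lambda x: w * x + b, xs, ys) <= target_mse:
            break  # goal reached: stop correcting
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return lambda x: w * x + b


def choose_model(candidates, xs_val, ys_val):
    """Reasoning: pick the candidate with the lowest error on held-out data."""
    return min(candidates, key=lambda name: mse(candidates[name], xs_val, ys_val))


candidates = {"constant": fit_constant(xs, ys), "line": fit_line(xs, ys)}
best = choose_model(candidates, [5.0, 6.0], [11.0, 13.1])
print(best)  # line: the fitted line extrapolates far better than the average
```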

What are the 4 types of AI?

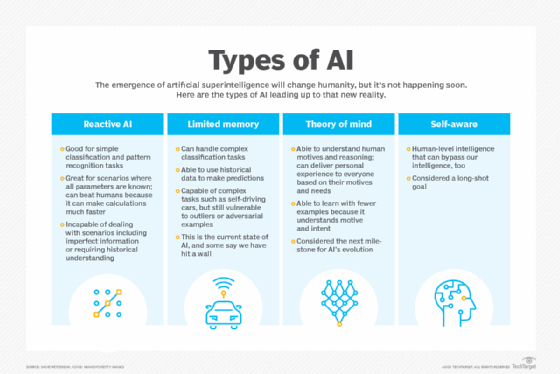

In his explanatory article on four main types of artificial intelligence, author David Petersson outlined how modern artificial intelligence evolved from AI systems capable of simple classification and pattern recognition tasks to systems capable of using historical data to make predictions. Propelled by a revolution in deep learning (machine learning that uses many-layered neural networks to learn from data), machine intelligence has advanced rapidly in the 21st century, bringing us such breakthrough products as self-driving cars and the virtual assistants Alexa and Siri.

The types of AI that exist today, including AI that can drive cars or defeat a world champion at the game of Go, are known as narrow or weak AI. These AI types have savant-like skill at certain tasks but lack general intelligence. The type of AI that demonstrates human-level intelligence and consciousness is still a work in progress.

Here are the four types of AI outlined in Petersson’s article and a summary of their characteristics:

Reactive AI. Algorithms used in this early type of AI lack memory and are reactive; that is, given a specific input, the output is always the same. Machine learning models using this type of AI are effective for simple classification and pattern recognition tasks. They can consider huge chunks of data and produce a seemingly intelligent output, but they are incapable of analyzing scenarios that include imperfect information or require historical understanding.

Limited memory machines. The underlying algorithms in limited memory machines are based on our understanding of how the human brain works and are designed to imitate the way our neurons connect. This type of deep learning can handle complex classification tasks and use historical data to make predictions; it is also capable of completing complex tasks, such as autonomous driving. (Learn about the key differences between AI, machine learning and deep learning.)

Despite their ability to far outdo typical human performance in certain tasks, limited memory machines are classified as having narrow intelligence because they lag behind human intelligence in other respects. They require huge amounts of training data to learn tasks humans learn with just a few examples, and they are vulnerable to outliers or adversarial examples.

Theory of mind. This type of as-yet-unrealized AI is defined as capable of understanding human motives and reasoning and therefore able to deliver personalized results based on an individual’s motives and needs. Also referred to as artificial general intelligence (AGI), theory of mind AI can learn with fewer examples than limited memory machines; it can contextualize and generalize information, and extrapolate knowledge to a broad set of problems. Artificial emotional intelligence — or the ability to detect human emotions and empathize with people — is being developed, but the current systems do not exhibit theory of mind and are very far from self-awareness, the next milestone in the evolution of AI.

Self-aware AI, aka artificial superintelligence. This type of AI is not only aware of the mental state of other entities but is also aware of itself. Self-aware AI, or artificial superintelligence (ASI), is defined as a machine with intelligence on par with human general intelligence and in principle capable of far surpassing human cognition by creating ever more intelligent versions of itself. Currently, however, we don’t know enough about how the human brain is organized to build an artificial one that is as, or more, intelligent in a general sense.
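The difference between the first two types can be sketched in a few lines of Python. This is a toy illustration under our own assumptions, not code from Petersson’s article: `reactive_classify` is a pure function whose output depends only on the current input, while the hypothetical `LimitedMemoryTracker` keeps a short, bounded window of past observations (here, another vehicle’s position, echoing the autonomous-driving example) and uses it to predict the next one. Real limited memory systems are deep learning models trained on large amounts of historical data, not hand-written rules like this.

```python
from collections import deque


def reactive_classify(brightness: float) -> str:
    """Reactive: a pure function of the current input with no memory,
    so the same input always produces the same output."""
    return "bright" if brightness >= 0.5 else "dark"


class LimitedMemoryTracker:
    """Limited memory (toy version): remembers only the last `window`
    positions of another vehicle, in metres, and predicts the next one."""

    def __init__(self, window: int = 3):
        # A bounded deque discards the oldest reading when a new one arrives.
        self.history = deque(maxlen=window)

    def observe(self, position: float) -> None:
        self.history.append(position)

    def predict_next(self) -> float:
        if not self.history:
            return 0.0  # nothing observed yet
        if len(self.history) < 2:
            return self.history[-1]  # no motion information: assume it stays put
        # Average displacement per time step across the remembered window.
        velocity = (self.history[-1] - self.history[0]) / (len(self.history) - 1)
        return self.history[-1] + velocity


tracker = LimitedMemoryTracker(window=3)
for position in [0.0, 2.0, 4.0, 6.0]:
    tracker.observe(position)
print(list(tracker.history))   # [2.0, 4.0, 6.0]: the first reading was forgotten
print(tracker.predict_next())  # 8.0
```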

Why is AI important in the enterprise?

According to research firm IDC, by 2025 the volume of data generated worldwide will reach 175 zettabytes (that is, 175 billion terabytes), an astounding 430% increase over the 33 zettabytes of data produced in 2018.
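The IDC figures are easy to check. Assuming decimal (SI) units, where 1 zettabyte is 10^21 bytes and 1 terabyte is 10^12 bytes, one zettabyte equals a billion terabytes, and growth from 33 to 175 zettabytes works out to roughly a 430% increase:

```python
# Decimal (SI) units: 1 ZB = 10**21 bytes, 1 TB = 10**12 bytes.
TB_PER_ZB = 10**21 // 10**12  # one billion terabytes per zettabyte

data_2018_zb = 33
data_2025_zb = 175

# Relative increase, as a percentage of the 2018 volume.
increase_pct = (data_2025_zb - data_2018_zb) / data_2018_zb * 100

print(f"{data_2025_zb} ZB = {data_2025_zb * TB_PER_ZB:,} TB")  # 175 ZB = 175,000,000,000 TB
print(f"Increase: {increase_pct:.0f}%")                       # Increase: 430%
```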

For companies committed to data-driven decision-making, the surge in data will be a boon. Large data sets are the raw material for yielding the in-depth business intelligence that drives improvements in existing business operations and leads to new lines of business.

Without AI, companies cannot capitalize on these vast data stores. As Cognilytica analyst Kathleen Walch explained, AI and big data play a symbiotic role in 21st-century business success: Deep learning, a subset of AI and machine learning, processes large data stores to identify the subtle patterns and correlations in big data that can give companies a competitive edge.

At the same time, AI’s ability to make meaningful predictions (to get at the truth of a matter rather than mimic human biases) requires not only vast stores of data but also data of high quality. Cloud computing environments have helped enable AI applications not only by providing the computational power needed to process and manage big data in a scalable and flexible architecture, but also by providing wider access to enterprise users.

Impact of AI in the enterprise

The value of AI to 21st-century business has been compared to the strategic value of electricity in the early 20th century, when electrification transformed industries like manufacturing and created new ones like mass communications. “AI is strategic because the scale, scope, complexity and the dynamism in business today is so extreme that humans can no longer manage it without artificial intelligence,” Chris Brahm, partner and director at Bain & Company, told TechTarget.

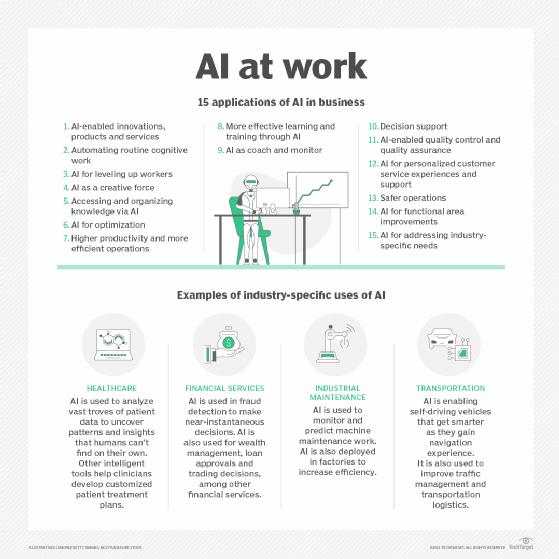

AI’s biggest impact on business in the near future stems from its ability to automate and augment jobs that today are done by humans.

Labor gains realized from using AI are expected to expand upon and surpass those made by current workplace automation tools. And by analyzing vast volumes of data, AI won’t simply automate work tasks, but will generate the most efficient way to complete a task and adjust workflows on the fly as circumstances change.

AI is already augmenting human work in many fields, from assisting doctors in medical diagnoses to helping call center workers deal more effectively with customer queries and complaints. In security, AI is being used to automatically respond to cybersecurity threats and prioritize those that need human attention. Banks are using AI to speed up and support loan processing and to ensure compliance. (See the section “Current business applications of AI.”)

But AI will also eliminate many jobs done today by humans — a major concern to workers, as described in the following sections on benefits and risks of AI.

What are the benefits of AI in the enterprise?

Most companies at this juncture are looking to use AI to optimize existing operations rather than radically transform their business models. The aforementioned gains in productivity and efficiency are the most cited benefits of implementing AI in the enterprise.

Here are six additional key benefits of AI for business:

- Improved customer service. The ability of AI to speed up and personalize customer service is among the top benefits businesses expect to reap from AI, ranked No. 2 among AI payoffs in a 2019 research study by MIT Sloan Management Review and Boston Consulting Group.

- Improved monitoring. AI’s capacity to process data in real time means organizations can implement near-instantaneous monitoring; for example, factory floors are using image recognition software and machine learning models in quality control processes to monitor production and flag problems.

- Faster product development. AI enables shorter development cycles and reduces the time between design and commercialization for a quicker ROI of development dollars.

- Better quality. Organizations expect a reduction in errors and increased adherence to compliance standards by using AI on tasks previously done manually or done with traditional automation tools, such as extract, transform and load. Financial reconciliation is an area where machine learning, for example, has substantially reduced costs, time and errors.

- Better talent management. Companies are using enterprise AI software to streamline the hiring process, root out bias in corporate communications and boost productivity by screening for top-tier candidates. Advances in speech recognition and other NLP tools give chatbots the ability to provide personalized service to job candidates and employees.

- Business model innovation and expansion. Digital natives like Amazon, Airbnb, Uber and others certainly have used AI to help implement new business models. Traditional companies may find AI-enabled business model transformation a hard sell, according to Andrew Ng, a pioneer of AI development at Google and Baidu and currently CEO and co-founder of Landing AI. He offered a six-step playbook for getting AI off the ground at traditional companies.

What are the risks of AI?

One of the biggest risks to the effective use of AI in the enterprise is worker mistrust. Many employees fear and distrust AI or remain unconvinced of its value in the workplace.

Anxieties about job elimination are not unfounded, according to many studies. A report from the Brookings Institution, “Automation and Artificial Intelligence: How Machines Are Affecting People and Places,” estimated that some 36 million jobs “face high exposure to automation” in the next decade. The jobs most vulnerable to elimination are in office administration, production, transportation and food preparation, but the study found that by 2030, virtually every occupation will be affected to some degree by AI-enabled automation. (AI is also creating new jobs: Gartner projected that AI would become a “net-positive job driver” in 2020, creating 500,000 more jobs than it eliminated, and that by 2025 it would generate 2 million net new jobs.)

Without worker trust, the benefits of AI will not be fully realized, Beena Ammanath, AI managing director at Deloitte Consulting LLP, told TechTarget in an article on the top AI risks businesses must confront when implementing the technology.

“I’ve seen cases where the algorithm works perfectly, but the worker isn’t trained or motivated to use it,” Ammanath said. Consider the example of an AI system on a factory floor that determines when a manufacturing machine must be shut down for maintenance.

“You can build the best AI solution — it could be 99.998% accurate — and it could be telling the factory worker to shut off the machine. But if the end user doesn’t trust the machine, which isn’t unusual, then that AI is a failure,” Ammanath said.

As AI models become more complex, explainability — understanding how an AI reached its conclusion — will become ever harder to convey to frontline workers who need to trust the AI to make decisions.

Companies must put users first, according to the experts interviewed in this report on explainable AI techniques. Data scientists should focus on providing the information relevant to the particular domain expert, rather than getting into the weeds of how a model works. For a machine learning model that predicts the risk of a patient being readmitted, for example, the physician may want an explanation of the underlying medical reasons, while a discharge planner may want to know the likelihood of readmission.
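To make the readmission example concrete, here is a minimal sketch of one model serving two audiences. It assumes a simple logistic regression with made-up features and weights (not a real clinical model). The physician gets the factors contributing most to the risk score, computed as weight times feature value, an attribution that is only this straightforward for linear models like this one; the discharge planner gets the probability alone.

```python
import math

# Hypothetical readmission model: illustrative features and weights only.
WEIGHTS = {
    "prior_admissions": 0.6,
    "heart_failure": 1.1,
    "age_over_75": 0.4,
    "lives_alone": 0.3,
}
BIAS = -3.0


def readmission_probability(patient: dict) -> float:
    """Logistic regression: squash the weighted score into a probability."""
    score = BIAS + sum(w * patient.get(f, 0) for f, w in WEIGHTS.items())
    return 1 / (1 + math.exp(-score))


def explain_for_physician(patient: dict, top_k: int = 2) -> list:
    """Return the features that push this patient's risk up the most."""
    contributions = {f: w * patient.get(f, 0) for f, w in WEIGHTS.items()}
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return [f for f, c in ranked[:top_k] if c > 0]


def explain_for_planner(patient: dict) -> str:
    """Return just the likelihood, which is what discharge planning needs."""
    return f"Estimated readmission risk: {readmission_probability(patient):.0%}"


patient = {"prior_admissions": 2, "heart_failure": 1, "age_over_75": 1, "lives_alone": 0}
print(explain_for_physician(patient))  # ['prior_admissions', 'heart_failure']
print(explain_for_planner(patient))    # Estimated readmission risk: 43%
```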

Here is a summary of three more big AI risks discussed in the article cited above:

- AI errors. While AI can eliminate human error, problematic data, poor training data or mistakes in the algorithms can lead to AI errors. And those errors can be dangerously compounded because of the large volume of transactions AI systems typically process. “Humans might make 30 mistakes in a day, but a bot handling millions of transactions a day magnifies any error,” Bain & Company’s Brahm said.

- Unethical and unintended practices. Companies need to guard against unethical AI. Enterprise leaders are likely familiar with the reports on the racial bias baked into the AI-based risk prediction tools used by some judges to sentence criminals and decide bail. Companies must also be on the alert for unintended consequences of using AI to make business decisions. An example is a grocery chain that uses AI to determine pricing based on competition from other grocers. In poor neighborhoods where there is little or no competition, the logical recommendation might be to charge more for food, but is this the strategy the grocery chain intends?

- Erosion of key skills. This is a rarely considered but not unimportant risk of AI. In the wake of the two plane crashes involving Boeing 737 Max jets, some experts expressed concern that pilots were losing basic flying skills, or at least the ability to employ them, as the jet relied on increasing amounts of automation in the cockpit. Although these are extreme cases, they should raise questions about the key skills enterprises might want to preserve in their human workforce as AI’s use expands, Brahm said.
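The volume point in the “AI errors” risk above is simple arithmetic. The sketch below takes the 99.998% accuracy figure from Ammanath’s hypothetical factory example and applies it to assumed volumes of 1 million and 10 million transactions a day. It treats errors as independent, which is the optimistic case.

```python
def expected_errors(accuracy: float, transactions_per_day: int) -> float:
    """Expected number of wrong outputs per day, assuming independent errors."""
    return (1 - accuracy) * transactions_per_day


for volume in (1_000_000, 10_000_000):
    # 99.998% accuracy means about 2 errors per 100,000 transactions.
    print(f"{volume:,} transactions/day -> about {expected_errors(0.99998, volume):.0f} errors")
```

At 1 million transactions a day, even 99.998% accuracy yields about 20 errors, comparable to the 30 daily human mistakes in Brahm’s example; at 10 million it is about 200. And if the errors come from a systematic flaw such as bad training data, they are not independent: every transaction of the affected kind can go wrong at once.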

Current business applications of AI

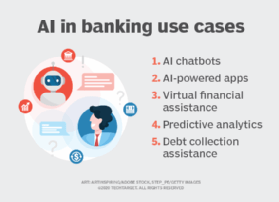

A Google search for “AI use cases” turns up millions of results, an indication of the many enterprise applications of AI. Certainly, AI use cases span industries, from financial services (an early adopter) to healthcare, education, marketing and retail. AI has made its way into every business department, from marketing, finance and HR to IT and business operations. Additionally, the use cases incorporate a range of AI applications. Among them: natural language generation tools used in customer service, deep learning platforms used in automated driving, and facial recognition tools used by law enforcement.

Here is a sampling of current AI use cases in multiple industries and business departments with links to the TechTarget articles that explain each one in depth.

Financial services. Artificial intelligence is transforming how banks operate and how customers bank. Read about how Chase Bank, JPMorgan Chase, Bank of America, Wells Fargo and other banking behemoths are using AI to improve back-office systems, automate customer service and create new opportunities.

Manufacturing. Collaborative robots, aka cobots, are working on assembly lines and in warehouses alongside humans, functioning as an extra set of hands; factories are using AI to predict maintenance needs; and machine learning algorithms detect buying habits to predict product demand for production planning. Read about these AI implementations and potential new AI applications in “10 AI use cases in manufacturing.”

Agriculture. The $5 trillion agriculture industry is using AI to yield healthier crops, reduce workloads and organize data. Read Cognilytica analyst Kathleen Walch’s deep dive into agriculture’s use of AI technology.

Law. The document-intensive legal industry is using AI to save time and improve client service. Law firms are deploying machine learning to mine data and predict outcomes; they are also using computer vision to classify and extract information from documents, and NLP to interpret requests for information. Watch Vince DiMascio talk about how his team is applying AI and robotic process automation at the large immigration law firm where he serves as CIO and CTO.

Education. In addition to automating the tedious process of grading exams, AI is being used to assess students and adapt curricula to their needs. Listen to this podcast with Ken Koedinger, professor of human-computer interaction and psychology at Carnegie Mellon University’s School of Computer Science, on how machine learning provides insight into how humans learn and is paving the way for personalized learning.

IT service management. IT organizations are using natural language processing to automate user requests for IT services. They are applying machine learning to ITSM data to gain a richer understanding of their infrastructure and processes. Dig deeper into how organizations are using AI to optimize IT services in this review of 10 AI use cases in ITSM.

How have AI use cases evolved?

The range of AI use cases in the enterprise is not only expanding but also evolving in the face of current market uncertainty. As companies continue to grapple with the implications of the coronavirus pandemic, AI will help companies understand how they need to pivot to stay relevant and profitable, said Arijit Sengupta, CEO of Aible, an AI platform provider.

“The most significant use cases are going to center on scenario planning, hypothesis testing and assumption testing,” Sengupta told TechTarget.

But model building will have to become nimbler and more iterative to get the most out of AI applications. Predictive models, for example, could be pushed out to salespeople, who in turn become critical players in feeding the models real-time empirical data for ongoing analysis. “For any use case, getting end-user feedback is critical in uncertain times, because end users will know things that haven’t surfaced in the data yet,” Sengupta said.
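One way to picture this feedback loop is a model that updates its estimates every time a salesperson reports an outcome. The sketch below is hypothetical (`WinRateModel`, the segment names and the `alpha` weighting are our assumptions, not a description of Aible’s product): it keeps an exponentially weighted win rate per customer segment, so recent field reports move the estimate more than older data does.

```python
class WinRateModel:
    """Hypothetical per-segment win-rate predictor that learns from
    outcomes reported by salespeople in the field."""

    def __init__(self, prior: float = 0.5, alpha: float = 0.2):
        self.prior = prior  # estimate used for a segment with no feedback yet
        self.alpha = alpha  # how strongly each new report moves the estimate
        self.rates = {}

    def predict(self, segment: str) -> float:
        return self.rates.get(segment, self.prior)

    def record_outcome(self, segment: str, won: bool) -> None:
        """Exponentially weighted update: move the estimate a fraction alpha
        of the way toward the latest observed outcome (1 = won, 0 = lost)."""
        current = self.predict(segment)
        self.rates[segment] = current + self.alpha * (float(won) - current)


model = WinRateModel()
for won in (False, False, True):  # three deals reported back from the field
    model.record_outcome("retail", won)
print(round(model.predict("retail"), 3))  # 0.456
print(model.predict("finance"))           # 0.5: no feedback yet, so the prior is used
```

A larger `alpha` reacts faster to changing conditions but is noisier; in practice the feedback would also be logged so the underlying model can be retrained.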

For a look at how AI use cases are evolving, read reporter George Lawton’s informative article on eight emerging AI use cases in the enterprise. The list includes the use of digital twin technology in business for purposes other than managing hard assets, such as heavy machinery, and increased investment in AI and machine learning in supply chain management.

AI adoption in the enterprise

Recent studies on AI adoption in the enterprise assert that AI deployments are rising. Gartner, for example, projected that by 2022 the average number of AI projects per company would grow to 35, a 250% increase over the average of 10 projects in 2020.

But whether the growth in AI adoption is as strong as anticipated or will pan out as predicted is open to debate. As Gartner noted, a 10-percentage-point gain in enterprise AI adoption to 14% in 2019 from 4% the previous year fell well short of the firm’s projected 21-point increase.
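The two Gartner figures describe different kinds of change, which are easy to conflate. Growth from 10 to 35 projects is a 250% relative increase, while the adoption figure is a gain in percentage points: moving from 4% to 14% is a 10-point gain (itself a 250% relative increase), and the projected 21-point increase would have lifted adoption to 25%. A small sketch of the distinction:

```python
def percent_change(old: float, new: float) -> float:
    """Relative change, in percent of the starting value."""
    return (new - old) / old * 100


def point_change(old_pct: float, new_pct: float) -> float:
    """Absolute change between two percentages, in percentage points."""
    return new_pct - old_pct


print(percent_change(10, 35))  # 250.0: AI projects per company, 2020 to 2022 (projected)
print(point_change(4, 14))     # 10: adoption gain in percentage points
print(percent_change(4, 14))   # 250.0: the same adoption gain as a relative change
print(4 + 21)                  # 25: adoption rate implied by the projected 21-point gain
```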

IBM’s “Global AI Adoption Index 2021,” conducted by Morning Consult on behalf of IBM, found that while AI adoption was nearly flat between 2020 and 2021, “significant investments are planned.” Nearly three-quarters of companies are now using AI (31%) or exploring its use (43%), according to the study. IT professionals responding to the IBM survey cited changing business needs in the wake of the pandemic as a driving factor in the adoption of AI: 43% said their companies have accelerated AI rollouts as a result of COVID-19. Other findings of note from the report include the following:

- Trustworthy and explainable AI is critical to business. Of the businesses using AI, 91% said their ability to explain how AI arrived at a decision is critical.

- The ability to access data anywhere is key for increasing AI adoption. Almost 90% of IT professionals said being able to run their AI projects wherever the data resides is key to the technology’s adoption.

- Natural language processing is at the forefront of recent adoption. Almost half of businesses are now using applications powered by NLP, and 25% of businesses plan to begin using NLP technology over the next 12 months. Customer service is the top NLP use case.

- The top three barriers to AI adoption. The survey found the main obstacles businesses face when adopting AI are limited AI expertise or knowledge (39%), increasing data complexity and data silos (32%), and lack of tools/platforms for developing AI models (28%).

Indeed, enterprises still face many challenges in deploying AI.

Companies that have deployed AI are realizing that figuring out how to do AI is not the same as using AI to make money. As Lawton chronicled in an in-depth report on “last-mile” delivery problems in AI, businesses are finding it much harder to weave AI technologies into existing business processes than to build or buy the complex AI models that promise to optimize those processes. Ian Xiao, manager at Deloitte Omnia AI, estimated that most companies deploy between 10% and 40% of their machine learning projects. Among the challenges holding back companies from integrating AI into their business processes are the following:

- lack of the last-mile infrastructure, such as robotic process automation, integration platform as a service, and low-code platforms needed to connect AI into the business;

- lack of domain experts who can assess what AI is good for — specifically, figuring out which decision-making elements of a process can be automated by AI and how to then reengineer the process; and

- lack of feedback on machine learning models, which need to be updated to reflect new data.

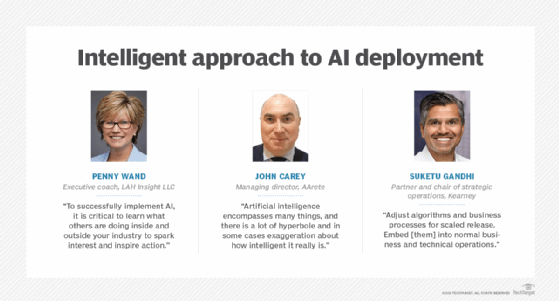

Industry best practices for deploying AI, however, are emerging, as described in a TechTarget article on criteria for success in AI, which highlights advice from practitioners in charge of big AI initiatives at Shell, Uber and other companies. The most critical factor cited by all the data scientists profiled was the need to work closely with the company’s subject matter experts. People with in-depth knowledge of the subject matter, they stressed, provide the context and nuance that are hard for deep learning tools to tease apart on their own.

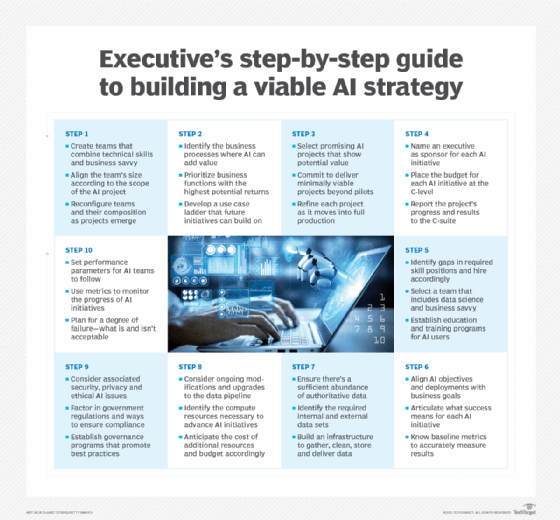

Steps for implementing artificial intelligence in the enterprise

AI comes in many forms: machine learning, deep learning, predictive analytics, NLP, computer vision and automation. As explained in this in-depth tip on implementing AI in your business, deriving value from AI’s many technologies requires companies to address issues related to people, processes and technology.

As with any emerging technology, the rules of implementation are still being written. Industry leaders in AI emphasize that an experimental mindset will yield better results than a “big bang approach.” Start with a hypothesis, followed by testing and rigorous measurement of results — and iterate. Here is a list of the 10 steps laid out in the article cited above:

- Build data fluency.

- Define your primary business drivers for AI.

- Identify areas of opportunity.

- Evaluate your internal capabilities.

- Identify suitable candidates.

- Pilot an AI project.

- Establish a baseline understanding.

- Scale incrementally.

- Bring overall AI capabilities to maturity.

- Continuously improve AI models and processes.
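The experimental approach described above (pilot a project, measure it against a baseline, then scale incrementally) can be expressed as a simple decision rule. This is a minimal sketch under our own assumptions: the metric (minutes per support ticket), the 10% improvement threshold and the doubling rollout schedule are hypothetical, and a production evaluation would also test whether the difference is statistically significant rather than comparing means alone.

```python
from statistics import mean


def relative_improvement(baseline, pilot):
    """Fractional reduction in a lower-is-better metric, such as minutes per ticket."""
    return (mean(baseline) - mean(pilot)) / mean(baseline)


def next_rollout_share(current_share, improvement, min_improvement=0.10):
    """Scale incrementally: double the share of work handled by the AI tool only
    if the pilot beat the baseline by at least min_improvement; otherwise hold
    the rollout where it is and iterate on the model."""
    if improvement < min_improvement:
        return current_share
    return min(1.0, current_share * 2)


# Hypothetical measurements: minutes per ticket before and during a pilot.
baseline_minutes = [12.0, 14.0, 13.0, 11.0]
pilot_minutes = [10.0, 10.5, 11.0, 9.5]

gain = relative_improvement(baseline_minutes, pilot_minutes)
print(f"Improvement over baseline: {gain:.0%}")  # Improvement over baseline: 18%
print(next_rollout_share(0.05, gain))            # 0.1: pilot passed, so double the rollout
```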

AI trends: Giant chips, neuro-symbolic AI, avocado armchairs

It is hard to overstate how much development is being done on artificial intelligence by vendors, governments and research institutions — and how quickly the field is changing.

On the hardware side, startups are developing new and different ways of organizing memory, compute and networking that could reshape the way leading enterprises design and deploy AI algorithms. At least one vendor has begun testing a single chip about the size of an iPad that, as detailed in this report on AI giant chips, can move data around thousands of times faster than existing AI chips.

Even accepted wisdom — such as the superiority of GPUs vs. CPUs for running AI workloads — is being challenged by leading AI researchers. A team at Rice University, for example, is working on a new category of algorithms, called SLIDE (Sub-LInear Deep learning Engine), that promises to make CPUs practical for more types of algorithms. CPUs, of course, are commodity hardware: present everywhere and cheap compared to GPUs. “If we can design algorithms like SLIDE that can run AI directly on CPUs efficiently, that could be a game changer,” Anshumali Shrivastava, assistant professor in the department of computer science at Rice, told TechTarget.

On the software side, scientists in academia and industry are pushing the limits of current applications of artificial intelligence. In the feverish quest to develop sentient machines that rival human general intelligence, even traditional antagonists in AI are burying the hatchet.

Proponents of symbolic AI, which comprises methods based on high-level symbolic representations of problems, logic and search, are joining forces with proponents of data-intensive neural networks. The aim is to develop AI that is good at capturing the compositional and causal structures found by symbolic AI, and that is also capable of the image recognition and natural language processing feats performed by deep neural networks. This neuro-symbolic approach would allow machines to reason about what they see, representing a milestone in the evolution of AI.

“Neuro-symbolic modeling is one of the most exciting areas in AI right now,” Brenden Lake, assistant professor of psychology and data science at New York University, told TechTarget.

Meanwhile, AI developments that seemed bleeding edge just a few years ago are becoming institutionalized as vendors, open source communities and technology giants like Google and Facebook build these advances into their products and projects. Some of the most important new concepts and improvements are outlined in this roundup of 2022 trends in AI. Trends on the list include the following:

- AutoML. Automated machine learning is getting better at labeling data and automatically tuning neural net architectures.

- Multi-modal learning. AI is getting better at supporting multiple modalities such as text, vision, speech and IoT sensor data in a single machine learning model.

- Tiny ML. These are AI and machine learning models that run on hardware-constrained devices, such as microcontrollers used to power cars, refrigerators and utility meters.

- AI-enabled conceptual design. AI is being trained to play a role in fashion, architecture, design and other creative fields. New AI models such as DALL·E can generate conceptual designs of something entirely new from a simple description, such as “an armchair in the shape of an avocado.”

- Quantum ML. Quantum machine learning remains beyond practical reach, but its promise is becoming more real as Microsoft, Amazon and IBM make quantum computing resources and simulators accessible via the cloud.

The future of artificial intelligence

One of the characteristics that has set us humans apart over our several-hundred-thousand-year history on Earth is a unique reliance on tools and a determination to improve upon the tools we invent. Once we figured out how to make AI work, it was inevitable that AI tools would become increasingly intelligent. What is the future of AI? It will be intertwined with the future of everything we do. Indeed, it will not be long before AI’s novelty in the realm of work will be no greater than that of a hammer or plow.

However, AI-infused tools are qualitatively different from all the tools of the past (which include beasts of burden as well as machines). We can talk to them, and they talk back. Because they understand us, they have rapidly invaded our personal space, answering our questions, solving our problems and, of course, doing ever more of our work.

This synergy is not likely to stop anytime soon. Arguably, the very distinction between human intelligence and artificial intelligence will evaporate. This blurring is being driven by other technology trends, which incidentally have themselves been spurred by AI. These include brain-machine interfaces that skip the requirement for verbal communication altogether, robotics that give machines all the capabilities of human action and, perhaps most exciting, a deeper understanding of the physical basis of human intelligence thanks to new approaches to unravel the wiring diagrams of actual brains. Ultimately, then, ours is a future in which the enhancement of intelligence may be bidirectional, making both our machines and ourselves more intelligent.
