Jeff Dean's personal review of Google AI in 2019: an average of two papers a day across 16 major directions, with the key open-source work gathered in one article

Guo Yipu, Qian Ming, Bian Ce, and Shisan, reporting from Aofei Temple

QbitAI report | WeChat official account: QbitAI

Another year has passed, and Jeff Dean has once again summarized, on behalf of Google AI, the major trends in AI over the past year.

This is Jeff's routine annual report as head of Google AI, and it is also a show of strength from the world's leading company in AI and cutting-edge technology.

He said that the past 2019 was a very exciting year.

Both academic and application fields are still flourishing, and open source and new technologies are advancing simultaneously.

The review starts with basic research, moves on to applications of the technology in emerging fields, and ends with an outlook on 2020.

Although the reporting format has not changed, artificial intelligence technology has taken another big step forward.

Jeff Dean summed up AI achievements in 16 major areas and revealed that 754 AI papers were published over the year, an average of two papers every day.

They cover AutoML, machine learning algorithms, quantum computing, perception technology, robotics, medical AI, AI for good, and more.

This long list of results not only advances the role of AI across society today but also offers a small preview of future trends.

It is no exaggeration to say that if you want to know the progress of AI technology in 2019, it is best to read Jeff’s summary; if you want to know where AI will go in 2020, you can also benefit a lot from reading Jeff’s article.

For the convenience of reading, we first compiled a small table of contents for you:

Machine learning algorithms: Understanding the training dynamics of neural networks

AutoML: Continued work on automating machine learning

Natural language understanding: Combining multiple modalities and tasks to advance the state of the art

Machine perception: Deeper understanding and perception of images, videos, and environments

Robotics: Self-supervised training and the release of robotics benchmarks

Quantum computing: Quantum supremacy achieved for the first time

Applications of AI in other disciplines: From the fly brain to mathematics, along with research on chemical molecules and artistic creation

Mobile AI applications: On-device speech and image recognition models, plus better translation, navigation, and photography

Health and medicine: Already used in clinical diagnosis of breast cancer and skin diseases

AI assists people with disabilities: Image recognition and speech transcription technology to benefit disadvantaged groups

AI promotes social welfare: Forecasting floods, protecting animals and plants, teaching children literacy and mathematics, and spending more than 100 million yuan on 20 public welfare projects

Developer tools build and benefit the researcher community: TensorFlow received a comprehensive upgrade

Open 11 data sets: From reinforcement learning to natural language processing to image segmentation

Global expansion of DIM Research and Google Research: Publishing a large number of papers and investing substantial resources to support teachers, students, and researchers in various fields

Artificial intelligence ethics: Advancing research on fairness, privacy protection, and explainability in AI

Looking ahead to 2020 and beyond: The deep learning revolution will continue to reshape how we think about computing and computers.

Machine learning algorithms

In 2019, Google conducted research in many different areas of machine learning algorithms and methods.

A major focus was understanding the training dynamics of neural networks.

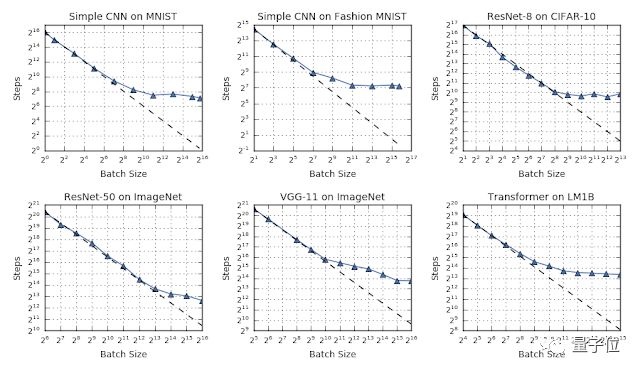

In the following study, the researchers' experimental results show that scaling the amount of data parallelism can make the model converge faster and more efficiently.

Paper address:

https://arxiv.org/pdf/1811.03600.pdf
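To make the idea of data parallelism concrete, here is a minimal sketch. It is not taken from the paper: the toy model `y = w * x`, the squared-error loss, and the helper names `grad` and `data_parallel_grad` are all assumptions chosen for illustration. It shows that when a batch is split into equal shards, averaging the per-worker gradients reproduces the gradient of the whole batch, which is why adding workers lets each step use a larger batch.

```python
def grad(w, batch):
    """Gradient of the mean squared error for the toy model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_grad(w, batch, num_workers):
    """Split the batch into equal shards, compute one gradient per
    (simulated) worker, then average the per-worker gradients."""
    shard_size = len(batch) // num_workers
    shards = [batch[i * shard_size:(i + 1) * shard_size]
              for i in range(num_workers)]
    return sum(grad(w, shard) for shard in shards) / num_workers


# Eight examples generated with a true weight of 3 (hypothetical data).
data = [(float(x), 3.0 * x) for x in range(1, 9)]
full_batch = grad(1.0, data)
parallel = data_parallel_grad(1.0, data, num_workers=4)
```

In this sketch the workers run one after another; in a real system each shard would be processed on a separate device and the averaging would be a communication step.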

Compared to data parallelism, model parallelism can be an efficient way to scale models.

GPipe is a library that makes model parallelization more efficient:

While one part of the entire model is processing certain data, other parts can do other work and calculate different data.

The results of this pipelined approach can be combined to simulate a larger effective batch size.

GPipe library address:

https://ai.googleblog.com/2019/03/introducing-gpipe-open-source-library.html
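The pipelining idea described above can be sketched as a simple schedule. This is only an illustration and does not use the GPipe API: the function names `pipeline_schedule` and `idle_fraction` are hypothetical, and only the forward pass is modeled. A batch is cut into micro-batches, and at each clock tick stage `s` works on micro-batch `t - s`, so different parts of the model are busy with different data at the same time.

```python
def pipeline_schedule(num_stages, num_micro_batches):
    """For each clock tick, list the micro-batch index each stage works on.
    None means the stage is idle at that tick (forward pass only)."""
    ticks = num_stages + num_micro_batches - 1
    schedule = []
    for t in range(ticks):
        row = []
        for s in range(num_stages):
            m = t - s  # stage s sees micro-batch m exactly s ticks after stage 0
            row.append(m if 0 <= m < num_micro_batches else None)
        schedule.append(row)
    return schedule


def idle_fraction(schedule):
    """Share of (tick, stage) slots in which a stage has nothing to do."""
    cells = [cell for row in schedule for cell in row]
    return cells.count(None) / len(cells)


sched = pipeline_schedule(num_stages=3, num_micro_batches=4)
no_pipelining = pipeline_schedule(num_stages=3, num_micro_batches=1)
```

Under these assumptions, splitting the batch into more micro-batches shrinks the share of idle slots, which is the efficiency gain the pipeline is after.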

Machine learning models are very effective when they are able to take raw input data and learn "disentangled" high-level representations.

These representations distinguish different kinds of examples by the properties that the user wants the model to be able to distinguish.

Advances in machine learning algorithms are primarily intended to encourage the learning of better representations that can be generalized to new examples, problems, and domains.

In 2019, Google studied this issue in different contexts:

For example, they examined which properties influence representations learned from unsupervised data to better understand what factors contribute to good representation and effective learning.

blog address:

https://ai.googleblog.com/2019/04/evaluating-unsupervised-learning-of.html

Google showed that statistics of the margin distribution can be used to predict the generalization gap, which helps in understanding which models generalize most effectively.
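As a rough illustration of what a margin distribution is, the sketch below computes output-layer margins and a few summary statistics. It is a simplification under stated assumptions: the published method is more involved, and the example logits, labels, and the helper names `margins` and `margin_summary` are made up here. The margin of an example is the score of its true class minus the best score among the other classes.

```python
import statistics


def margins(logits, labels):
    """Margin per example: true-class score minus the best other score.
    A negative margin means the example is misclassified."""
    result = []
    for row, label in zip(logits, labels):
        best_other = max(v for i, v in enumerate(row) if i != label)
        result.append(row[label] - best_other)
    return result


def margin_summary(values):
    """Quartiles of the margin distribution, usable as simple features."""
    q1, median, q3 = statistics.quantiles(values, n=4)
    return {"q1": q1, "median": median, "q3": q3}


# Hypothetical logits for four examples over three classes.
logits = [[2.0, 0.5, 0.1], [0.2, 1.5, 1.0], [0.3, 0.9, 0.4], [1.0, 0.2, 3.0]]
labels = [0, 1, 0, 2]
m = margins(logits, labels)
summary = margin_summary(m)
```

Summary statistics like these could then be fed to a simple regressor that predicts the gap between training and test accuracy; that step is omitted here.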

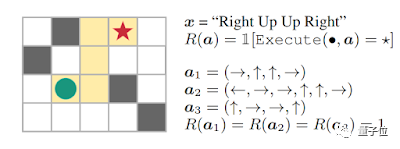

In addition, off-policy classification was studied in the context of reinforcement learning to better understand which models are likely to generalize best.

blog address:

http://ai.googleblog.com/2019/07/predicting-generalization-gap-in-deep.html

Methods for specifying reward functions for reinforcement learning were investigated, allowing learning systems to learn more directly from the true objectives.

blog address:

http://ai.googleblog.com/2019/02/learning-to-generalize-from-sparse-and.html

AutoML

Google continued its work on AutoML in 2019.

This approach can automate many aspects of machine learning and often achieve better results in certain types of machine learning meta-decisions, such as:

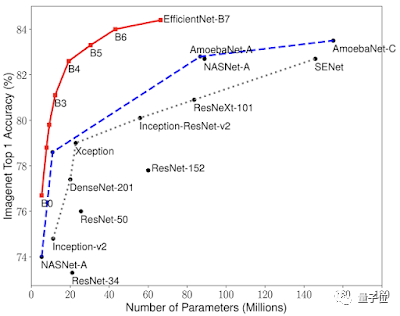

Google demonstrated how to use neural architecture search techniques to achieve better results on computer vision problems, achieving 84.4% accuracy on ImageNet with 8x fewer parameters than the previous best model.

blog address:

http://ai.googleblog.com/2019/05/efficientnet-improving-accuracy-and.html

Google demonstrated a neural architecture search method that finds efficient models suited to specific hardware accelerators, yielding high-accuracy, low-computation models that run on mobile devices.

blog address:

http://ai.googleblog.com/2019/08/efficientnet-edgetpu-creating.html

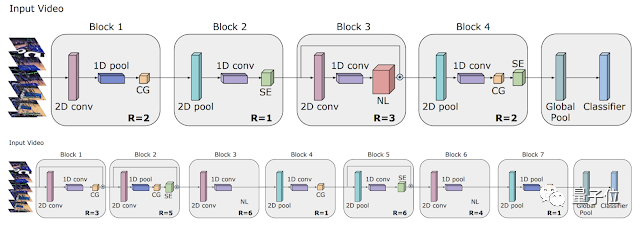

Google showed how it extended its AutoML work to video models, finding architectures that achieve state-of-the-art results as well as lightweight architectures that match the performance of hand-crafted models.

The lightweight architectures use 50 times less computation.

blog address:

http://ai.googleblog.com/2019/10/video-architecture-search.html

Google developed AutoML technology for tabular data and collaborated to release the technology as a new product, Google Cloud AutoML Tables.

blog address:

http://ai.googleblog.com/2019/05/an-end-to-end-automl-solution-for.html

Google showed that interesting neural network architectures can be found without any training steps to update the weights of the models being evaluated, which makes architecture search much more computationally efficient.

blog address:

http://ai.googleblog.com/2019/08/exploring-weight-agnostic-neural.html

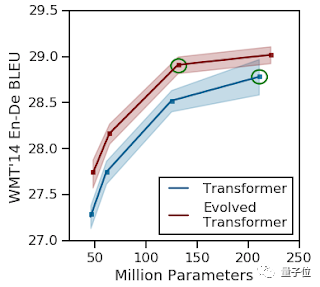

Google explored discovering architectures for NLP tasks. The discovered architectures perform significantly better than the ordinary Transformer model at a greatly reduced computational cost.

blog address:

http://ai.googleblog.com/2019/06/applying-automl-to-transformer.html

Research demonstrated that automatically learned data augmentation methods can be extended to speech recognition models.

Compared with existing data augmentation methods driven by human ML experts, they achieve significantly higher accuracy with less data.

blog address:

http://ai.googleblog.com/2019/04/specaugment-new-data-augmentation.html
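The linked SpecAugment post applies augmentation directly to the spectrogram by masking blocks of frequency channels and time steps. The sketch below is a simplified illustration of that masking only: time warping is omitted, the mask widths are arbitrary, and the function name `mask_spectrogram` and the list-of-lists spectrogram layout are assumptions made for this example.

```python
import random


def mask_spectrogram(spec, max_freq_width, max_time_width, rng):
    """Return a copy of spec (a list of frequency rows, each a list of time
    frames) with one random frequency band and one random time span zeroed."""
    num_freq, num_time = len(spec), len(spec[0])
    out = [row[:] for row in spec]  # copy so the input stays untouched

    # Frequency mask: zero f_width consecutive frequency rows.
    f_width = rng.randint(0, max_freq_width)
    f_start = rng.randint(0, num_freq - f_width)
    for f in range(f_start, f_start + f_width):
        out[f] = [0.0] * num_time

    # Time mask: zero t_width consecutive time frames in every row.
    t_width = rng.randint(0, max_time_width)
    t_start = rng.randint(0, num_time - t_width)
    for row in out:
        for t in range(t_start, t_start + t_width):
            row[t] = 0.0
    return out


# Hypothetical 6-channel, 10-frame spectrogram filled with ones.
spec = [[1.0] * 10 for _ in range(6)]
augmented = mask_spectrogram(spec, max_freq_width=2, max_time_width=3,
                             rng=random.Random(0))
```

Each call with a different random state produces a differently masked copy, so one recording can yield many training examples.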

Google launched its first speech application of AutoML, for keyword spotting and spoken language identification.

In experiments, the search found models better than human-designed ones: both more efficient and better performing.

blog address:

https://www.isca-speech.org/archive/Interspeech_2019/abstracts/1916.html

Natural language understanding

Models for natural language understanding, translation, natural dialogue, speech recognition, and related tasks have made significant progress over the past few years.

One theme of Google's work in 2019 was improving the state of the art by combining modalities or tasks to train more powerful models.

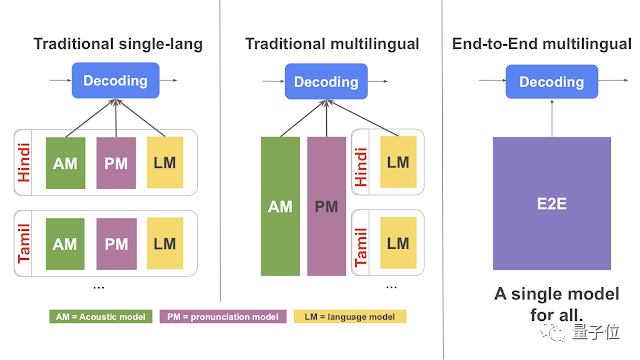

For example, training a single model to translate between 100 languages (rather than using 100 different models) significantly improves translation quality.

blog address:

http://ai.googleblog.com/2019/10/exploring-massively-multilingual.html

Google showed how combining speech recognition and language models, and training the system on multiple languages, can significantly improve speech recognition accuracy.

blog address:

http://ai.googleblog.com/2019/09/large-scale-multilingual-speech.html

Research demonstrated that a joint model can be trained for speech recognition, translation, and text-to-speech generation.

This approach also has certain advantages, such as preserving the speaker's voice in the generated translated audio and a simpler overall learning system.

blog address:

http://ai.googleblog.com/2019/05/introducing-translatotron-end-to-end.html

Research showed how to combine many different objectives to produce models that are significantly better at semantic retrieval.

For example, ask Google's Talk to Books, "What scent evokes memories?"

The result is, "For me, the scent of jasmine and the aroma of baking sheets remind me of my carefree childhood."

blog address:

http://ai.googleblog.com/2019/07/multilingual-universal-sentence-encoder.html

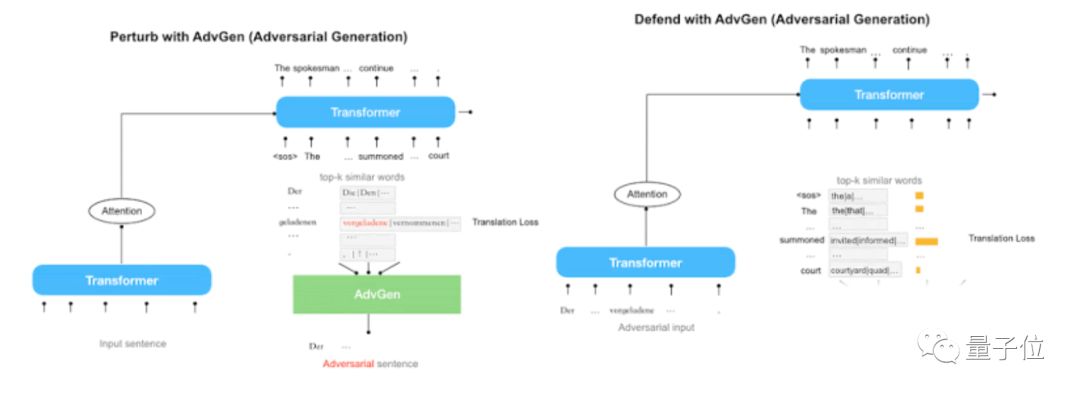

Google showed how adversarial training procedures can be used to significantly improve the quality and robustness of language translations.

blog address:

http://ai.googleblog.com/2019/07/robust-neural-machine-translation.html

With the development of models based on seq2seq, Transformer, BERT, Transformer-XL, and ALBERT, Google's language understanding capabilities continue to improve, and they have been applied to many core products and features.

In 2019, the application of BERT to core search and ranking algorithms resulted in the largest improvement in search quality in the past five years (and one of the largest ever).

Machine perception

Models for better understanding static images have made significant progress over the past decade.

The following is Google's main research in this field over the past year, covering deeper understanding of images and videos as well as perception of everyday life and the environment. Specifically:

Investigating finer-grained visual understanding in Google Lens to support more powerful visual search.

blog address:

https://www.blog.google/products/search/helpful-new-visual-features-search-lens-io/

Demonstrating the Nest Hub Max’s smart camera features, such as quick gestures, face matching, and smart video call framing.

blog address:

https://blog.google/products/google-nest/hub-max-io/

Investigating better video depth prediction models.

blog address:

https://ai.googleblog.com/2019/05/moving-camera-moving-people-deep.html

Using temporal cycle-consistency to learn better representations for fine-grained temporal understanding of videos.

blog address:

https://ai.googleblog.com/2019/08/video-understanding-using-temporal.html

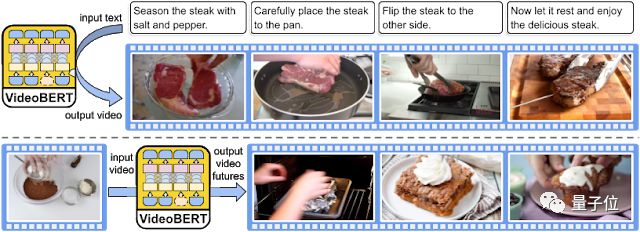

Learning temporally consistent representations across text, speech, and video from unlabeled videos.

blog address:

https://ai.googleblog.com/2019/09/learning-cross-modal-temporal.html

Predicting future visual input from observations of the past.

blog address:

https://ai.googleblog.com/2019/03/simulated-policy-learning-in-video.html

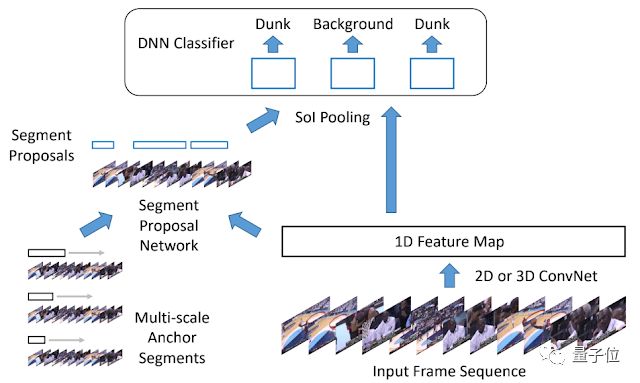

Demonstrating that models can better understand action sequences in videos.

blog address:

https://ai.googleblog.com/2019/04/capturing-special-video-moments-with.html

Robotics

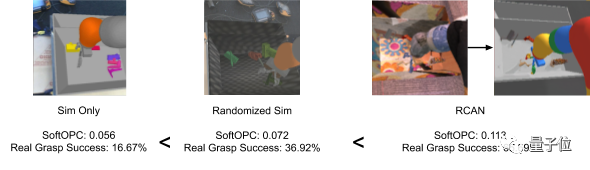

The application of machine learning in robot control is an important research area at Google. Google believes that this is an important tool that enables robots to operate effectively in complex real-world environments (such as daily homes and businesses).

Google’s work in robotics in 2019 includes:

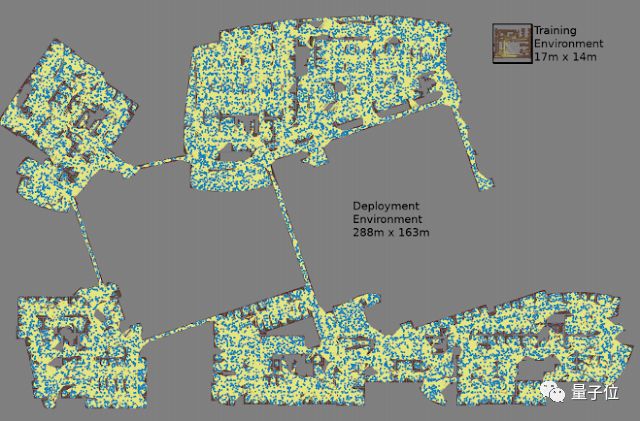

1. In Long-Range Robotic Navigation via Automated Reinforcement Learning, Google showed how to combine reinforcement learning with long-range planning so that robots can navigate complex environments (such as Google office buildings) more effectively.

Related Links:

http://ai.googleblog.com/2019/02/long-range-robotic-navigation-via.html

2. In PlaNet, Google demonstrated how to learn a world model effectively from images alone, and how this model can be used to complete tasks with fewer learning episodes.

Related Links:

http://ai.googleblog.com/2019/02/introducing-planet-deep-planning.html

3. In TossingBot, Google unified physics and deep learning, allowing the robot to learn intuitive physical principles through experimentation and then throw objects into boxes according to what it learned.

Related Links:

http://ai.googleblog.com/2019/03/unifying-physics-and-deep-learning-with.html

4. In its Soft Actor-Critic research, Google showed that a reinforcement learning algorithm can be trained to maximize both the expected reward and the entropy of the policy.

This can help the robot learn faster and be more robust to changes in the environment.

Related Links:

http://ai.googleblog.com/2019/01/soft-actor-critic-deep-reinforcement.html
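The maximum-entropy objective behind Soft Actor-Critic adds a policy-entropy bonus, weighted by a temperature `alpha`, to the reward at every step. The sketch below only evaluates that objective for hand-written discrete policies along one fixed trajectory. It is not the training algorithm, and the rewards, the policies, and the helper names `entropy` and `soft_objective` are assumptions for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a categorical action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def soft_objective(rewards, policies, alpha):
    """Sum over time steps of reward plus alpha times the policy entropy."""
    return sum(r + alpha * entropy(p) for r, p in zip(rewards, policies))


# Two time steps with identical rewards (hypothetical numbers).
rewards = [1.0, 1.0]
deterministic = [[1.0, 0.0], [1.0, 0.0]]  # always picks action 0
stochastic = [[0.5, 0.5], [0.5, 0.5]]     # keeps exploring both actions

j_deterministic = soft_objective(rewards, deterministic, alpha=0.1)
j_stochastic = soft_objective(rewards, stochastic, alpha=0.1)
```

With equal rewards, the policy that stays more random scores higher under this objective, which is the pressure toward exploration and robustness that the text describes.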

5. Google also developed a self-supervised learning algorithm for robots that lets them learn to assemble objects by first disassembling them. This shows that robots, like children, can learn from taking things apart.

Related Links:

http://ai.googleblog.com/2019/10/learning-to-assemble-and-to-generalize.html

6. Finally, Google also released ROBEL, an open-source platform of benchmarks for low-cost robots, helping other developers develop robot hardware faster and more conveniently.

Related Links:

http://ai.googleblog.com/2019/10/robel-robotics-benchmarks-for-learning.html

Quantum computing

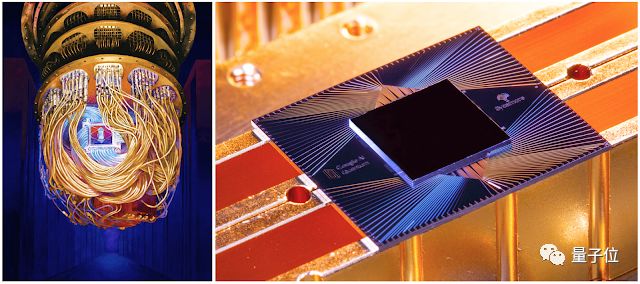

In 2019, Google achieved a major result in quantum computing, demonstrating quantum supremacy to the world for the first time: on one computing task, a quantum computer was far faster than a classical computer.

The quantum computer completed in just 200 seconds a task that would take a classical computer 10,000 years. This research appeared on the cover of Nature on October 24, 2019.

△ Google's Sycamore processor for quantum computing

Google CEO Pichai said: "Its significance is like the first rocket successfully breaking away from the gravity of the earth and flying to the edge of space." Quantum computers will play an important role in fields such as materials science, quantum chemistry and large-scale optimization.

Google is also working to make quantum algorithms easier to express and quantum hardware easier to control, and has found ways to use classical machine learning techniques in quantum computing.

Applications of AI in other disciplines

Google has published many papers on applying artificial intelligence and machine learning to other scientific fields, mostly through collaborations among multiple organizations.

Paper collection:

https://research.google/pubs/?area=general-science

This year’s highlights include:

The interactive automatic 3D reconstruction of the fly brain uses a machine learning model to carefully map each neuron of the fly brain. Jeff Dean said this is a milestone in mapping the structure of the fly brain.

Related blogs:

https://ai.googleblog.com/2019/08/an-interactive-automated-3d.html

In Learning Better Simulation Methods for Partial Differential Equations, Google used machine learning to accelerate the solution of partial differential equations, which are at the heart of fundamental computational problems in climate science, fluid dynamics, electromagnetism, heat conduction, and general relativity.

△ Simulation of two solutions to Burgers' equation

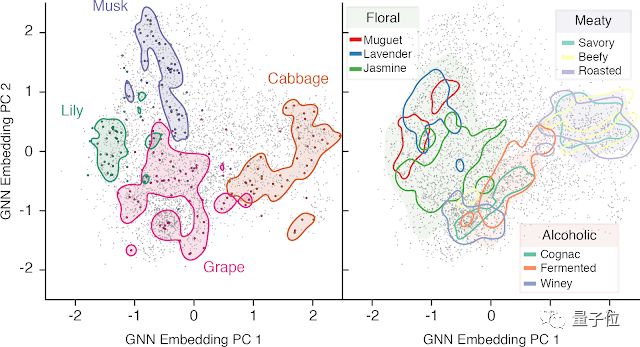

Google also uses machine learning to predict smell: a graph neural network (GNN) takes a molecule's structure and predicts what it smells like.

Related reports:

Google creates AI perfumer: One look at the molecular structure and you know what it smells like

Also in chemistry, Google built a reinforcement learning framework to optimize molecules.

Related papers:

https://www.nature.com/articles/s41598-019-47148-x

In artistic creation, Google AI has also been active, for example in artistic expression that combines AI and AR.

https://www.blog.google/outreach-initiatives/arts-culture/how-artists-use-ai-and-ar-collaborations-google-arts-culture/

Use machines to re-choreograph dances:

https://www.blog.google/technology/ai/bill-t-jones-dance-art/

New exploration of AI composition:

https://www.blog.google/technology/ai/behind-magenta-tech-rocked-io/

It also led to a fun AI composition Doodle:

https://www.blog.google/technology/ai/honoring-js-bach-our-first-ai-powered-doodle/

Mobile AI applications

Much of what Google does uses machine learning to give phones new capabilities. These models run on the phone itself, so the features still work even in airplane mode.

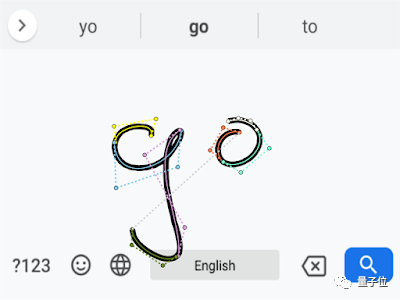

Speech recognition, vision, and handwriting recognition models now all run on the phone.

Related blogs:

Speech recognition model:

https://ai.googleblog.com/2019/03/an-all-neural-on-device-speech.html

Vision model:

https://ai.googleblog.com/2019/11/introducing-next-generation-on-device.html

Handwriting recognition model:

https://ai.googleblog.com/2019/03/rnn-based-handwriting-recognition-in.html

Jeff Dean said this paves the way for more powerful new features.

In addition, Google’s highlights on mobile phones this year include:

Live Caption, which automatically adds captions to video played by any app on the phone.

Related blogs:

https://ai.googleblog.com/2019/10/on-device-captioning-with-live-caption.html

The Recorder app allows you to search for content in audio recorded on your phone.

Related blogs:

https://ai.googleblog.com/2019/12/the-on-device-machine-learning-behind.html

The camera translation feature of Google Translate has also been upgraded, adding support for languages such as Arabic, Hindi, Malay, Thai, and Vietnamese. It now translates not only between English and other languages but also between pairs of non-English languages, and it automatically finds where the text is in the camera frame.

Related blogs:

https://www.blog.google/products/translate/google-translates-instant-camera-translation-gets-upgrade/

An Augmented Faces API has also been released in ARCore to help you build real-time AR experiences.

Augmented Faces API:

https://developers.google.com/ar/develop/java/augmented-faces/

There is also on-device hand gesture recognition, which makes gesture-based interaction possible.

Related reports:

Google's open source gesture recognizer can be used on mobile phones, runs smoothly, and has a ready-made app, but it was broken by us.

RNN was also used to improve handwriting input recognition on mobile phone screens.

Related blogs:

https://ai.googleblog.com/2019/03/rnn-based-handwriting-recognition-in.html

In terms of navigation and positioning, GPS is often only a rough positioning, but AI can play a key role.

Combined with Google Street View data, if you hold up the phone and turn around, the phone becomes a friend who knows the way, pointing things out based on the street view and the map: which building this is, which street this is, which way is south and which is north, and which way you should go.

Related blogs:

https://ai.googleblog.com/2019/02/using-global-localization-to-improve.html

In addition, to protect user privacy, Google has continued to study federated learning. The following paper, co-authored by the Google team in 2019, surveys progress in federated learning:

https://arxiv.org/abs/1912.04977
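A common building block of federated learning is federated averaging: each device trains on its own data, and only the resulting model weights are sent back and combined. The sketch below shows just that combining step under simple assumptions. The weight vectors, the example counts, and the function name `federated_average` are hypothetical, and real systems add client sampling, secure aggregation, and many rounds of training.

```python
def federated_average(client_weights, client_sizes):
    """Average client model weights, weighting each client by its number of
    local training examples. Only weights are combined, never raw data."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Two simulated phones: one trained on 1 example, the other on 3.
clients = [[1.0, 2.0], [3.0, 4.0]]
sizes = [1, 3]
global_weights = federated_average(clients, sizes)
```

The client with more local data pulls the shared model further toward its own weights, while the server never sees the examples themselves.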

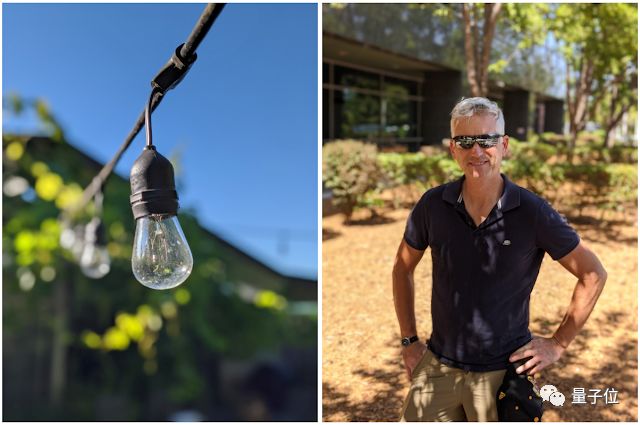

Then there is the perennial topic of phone photography. In 2019, Google improved selfie-taking on phones.

Related blogs:

https://ai.googleblog.com/2019/04/take-your-best-selfie-automatically.html

Background blur and portrait mode also received improvements in 2019.

Related blogs:

https://ai.googleblog.com/2019/12/improvements-to-portrait-mode-on-google.html

Night Sight photography of starry skies has also improved tremendously, and the team published a paper at SIGGRAPH Asia.

Related blogs:

https://ai.googleblog.com/2019/11/astrophotography-with-night-sight-on.html

Related papers:

https://arxiv.org/abs/1905.03277

https://arxiv.org/abs/1910.11336

Health and medicine

2019 is the first full year for the Google Health team.

At the end of 2018, Google reorganized the Google Research health team, DeepMind Health, and health-related hardware departments to create a new Google Health team.

1. Google has made many achievements in the diagnosis and early detection of diseases:

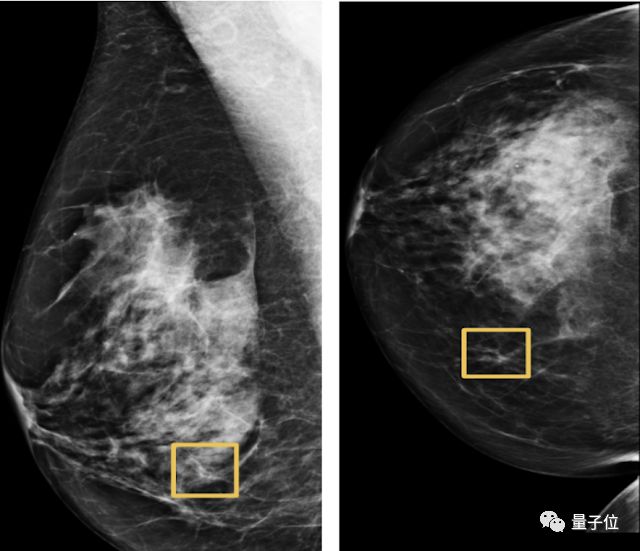

Breast cancer is detected using deep learning models with higher accuracy than human experts, reducing false positive and false negative cases in diagnosis. This research was just published in Nature magazine not long ago.

In addition, Google has also made some new achievements in diagnosing skin diseases, predicting acute kidney injury, and detecting early lung cancer.

2. Google combines machine learning with other technologies in medical tools, for example adding augmented reality to microscopes to help doctors quickly locate lesions.

Google has also built a human-centered similar image search tool for pathologists, allowing examination of similar cases to help doctors make more effective diagnoses.

AI assists people with disabilities

AI is becoming more and more closely related to our lives. Over the past year, Google has used AI to help us in our daily lives.

We can easily see beautiful images, hear favorite songs, or talk to loved ones. However, more than a billion people around the world are unable to understand the world in these ways.

Machine learning technology can serve people with disabilities by converting these audiovisual signals into other signals. The AI assistant technologies provided by Google include:

Lookout helps people who are blind or have low vision recognize information about their surroundings.

The real-time transcription technology Live Transcribe helps people who are deaf or hard of hearing by quickly converting speech into text.

Project Euphonia enables personalized speech-to-text conversion. This research improves the accuracy of automatic speech recognition for people with conditions such as ALS that cause slurred speech.

There is also a Parrotron project that also uses end-to-end neural networks to help improve communication, but the research focus is on speech-to-speech conversion.

For blind and partially sighted people, Google uses AI technology to generate descriptions of images. Chrome can now automatically create descriptive content when a screen reader encounters an image or graphic without a description.

Lens for Google Go, a tool that reads text aloud, helps users who cannot read get information from the written world.

AI promotes social welfare

Jeff Dean said that machine learning has great significance in solving many major social problems. Google has been making efforts in some social problem areas and is committed to allowing others to use creativity and skills to solve these problems.

For example, floods affect hundreds of millions of people every year. Google uses machine learning, computing and better databases to make flood predictions and send alerts to millions of people in affected areas.

They also held a workshop that brought together many researchers to work specifically on this problem.

Related blogs:

https://www.blog.google/technology/ai/tracking-our-progress-on-flood-forecasting/

https://ai.googleblog.com/2019/09/an-inside-look-at-flood-forecasting.html

https://ai.googleblog.com/2019/03/a-summary-of-google-flood-forecasting.html

In addition, Google has also done some work related to machine learning and animal and plant research.

They worked with seven wildlife conservation organizations to use machine learning to help analyze photo data of wildlife and find where their colonies are.

Related blogs:

https://www.blog.google/products/earth/ai-finds-where-the-wild-things-are/

Google is also working with the National Oceanic and Atmospheric Administration to use underwater sound data to determine the location of whale populations.

Related blogs:

https://www.blog.google/technology/ai/pattern-radio-whale-songs/

Google has released a set of tools to use machine learning to study biodiversity.

Related blogs:

A New Workflow for Collaborative Machine Learning Research in Biodiversity

https://ai.googleblog.com/2019/10/a-new-workflow-for-collaborative.html

They also held a Kaggle competition on using computer vision to classify diseases of cassava leaves. Cassava is the second largest source of carbohydrates in Africa, and cassava diseases threaten people's food security.

https://www.kaggle.com/c/cassava-disease

Google Earth's Timelapse function has also been updated, and you can even see population flow and migration data from here.

Related blogs:

https://ai.googleblog.com/2019/06/an-inside-look-at-google-earth-timelapse.html

https://ai.googleblog.com/2019/11/new-insights-into-human-mobility-with.html

For education, Google built the Bolo app, which uses speech recognition to coach children as they learn to read English. The app runs entirely on-device and works offline. It has helped 800,000 Indian children learn to read, and the children have read a total of 1 billion words. In a pilot across 200 villages in India, reading ability improved for 64% of the children.

It’s like a Google version of English Liulishuo.

Related blogs:

https://www.blog.google/technology/ai/bolo-literacy/

In addition to literacy, there are also more complex study subjects such as mathematics and physics. Google made the Socratic app to help high school students learn math.

In addition, in order to let AI play a greater role in public welfare, Google held the AI Impact Challenge, which collected more than 2,600 proposals from 119 countries.

In the end, 20 proposals that could solve major social and environmental issues stood out. Google invested US$25 million (more than 170 million yuan) in funding for these proposals and made some achievements, including:

Médecins Sans Frontières (MSF) has created a free mobile app that uses image recognition tools to help doctors in clinics in disadvantaged areas analyze antimicrobial images and recommend which drugs to use for patients. This project has been piloted in Jordan.

Médecins Sans Frontières project coverage:

https://www.doctorswithoutborders.org/what-we-do/news-stories/news/msf-receives-google-grant-develop-new-free-smartphone-app-help

One billion people in the world rely on small farms to make a living, but once pests and diseases occur, their livelihood will be cut off.

Therefore, a nonprofit called Wadhwani AI uses image classification models to identify pests on farms and to recommend which pesticides to spray and when, in order to increase crop yields.

Illegal logging of tropical rainforests is a major factor in climate change. An organization called "Rainforest Connection" uses deep learning for bioacoustic monitoring. Using old mobile phones, it can track the health of the rainforest and detect threats.

△ 20 public welfare projects funded by Google

Developer tools build and benefit the researcher community

As the world's leading AI company, Google is also an open-source pioneer that keeps contributing to the community. One part of this is TensorFlow.

Jeff Dean said that the past year has been an exciting year for the open source community because of the release of TensorFlow 2.0.

This is the first major upgrade to TensorFlow since its release, making it easier than ever to build ML systems and applications.

Related QbitAI report:

Google TF 2.0 was released early in the morning! "Change everything and defeat PyTorch"

In TensorFlow Lite, they added support for fast mobile GPU inference; and released Teachable Machine 2.0, which can train a machine learning model with just a button without writing code.

Related QbitAI report:

TensorFlow Lite releases major update! Support mobile GPU, inference speed increased by 4-6 times

There is also MLIR, an open-source machine learning compiler infrastructure that addresses the growing complexity of software and hardware fragmentation and makes it easier to build AI applications.

At NeurIPS 2019, they demonstrated how to use JAX, an open source high-performance machine learning research system:

https://nips.cc/Conferences/2019

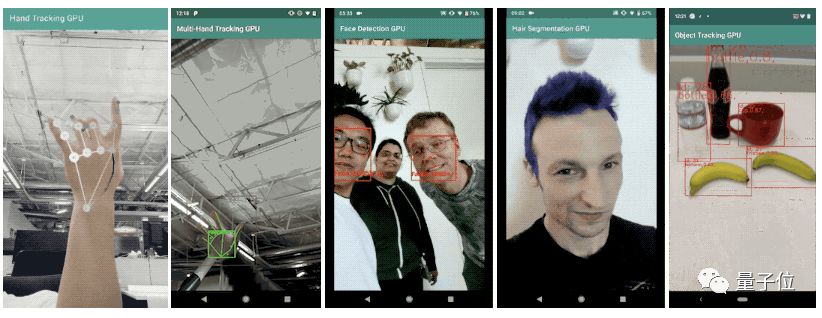

In addition, they also open sourced MediaPipe, a framework for building ML pipelines for perception and multi-modal applications:

https://github.com/google/mediapipe

and XNNPACK, an efficient floating-point neural network inference operator library:

https://github.com/google/XNNPACK

Of course, Google also handed out some freebies.

Jeff Dean said that by the end of 2019 Google had given more than 1,500 researchers around the world free access to Cloud TPUs through the TensorFlow Research Cloud, and that its introductory course on Coursera had more than 100,000 students.

At the same time, he also introduced some "heartwarming" cases, such as with the help of TensorFlow, a college student discovered two new planets and established a method to help others discover more planets.

There are also college students using TensorFlow to identify potholes and dangerous road cracks in Los Angeles.

The other part is open datasets.

Open 11 data sets

After releasing the data set search engine in 2018, Google is still working hard in this area this year and doing its best to contribute to this search engine.

Over the past year, Google opened 11 data sets in various fields. The resources are listed below; bookmark them for later.

Open Images V5 adds segmentation masks to the annotation set, with 2.8 million samples spanning 350 categories. QbitAI report:

2.8 million samples! Google opens the largest segmentation mask data set in history, starting a new round of challenges

The Natural Questions dataset is the first dataset to use naturally occurring queries and to require finding answers by reading an entire page rather than extracting them from a short paragraph. It contains 300,000 question-answer pairs, and BERT cannot even score 70 on it. QbitAI report:

Google releases the super-difficult question answering data set "Natural Questions": 300,000 question-answer pairs, and BERT cannot even reach 70 points

Dataset for detecting deepfakes:

https://ai.googleblog.com/2019/09/contributing-data-to-deepfake-detection.html

The football simulation environment Google Research Football lets agents play freely in a FIFA-like world and learn more football skills; Qubit has reported on it.

The landmark dataset Google-Landmarks-v2 includes 5 million images covering 200,000 landmarks; Qubit has reported on it as well.

YouTube-8M Segments dataset, a large-scale classification and temporal localization dataset, includes human-verified labels at the 5-second segment level of YouTube-8M videos:

https://ai.googleblog.com/2019/06/announcing-youtube-8m-segments-dataset.html

AVA Spoken Activity dataset, a multimodal audio-visual dataset for perceiving conversation in video:

https://research.google.com/ava/

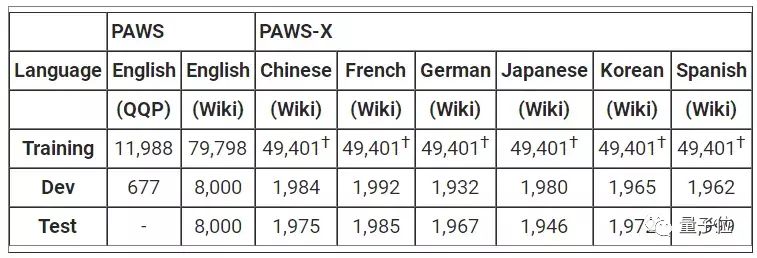

PAWS and PAWS-X: built for paraphrase identification, both datasets consist of well-formed sentence pairs with a high degree of lexical overlap. About half of the pairs are paraphrases and half are not:

https://ai.googleblog.com/2019/10/releasing-paws-and-paws-x-two-new.html

Two natural language dialogue datasets, collected by pairing two people in conversation to mimic a human conversation with a digital assistant:

https://ai.googleblog.com/2019/09/announcing-two-new-natural-language.html

Visual Task Adaptation Benchmark: a benchmark for visual task adaptation that Google launched, modeled on GLUE and ImageNet. It helps users better understand which visual representations generalize to more new tasks, thereby reducing data requirements across visual tasks:

http://ai.googleblog.com/2019/11/the-visual-task-adaptation-benchmark.html

The Schema-Guided Dialogue dataset, the largest public corpus of task-oriented conversations, with over 18,000 conversations across 17 domains:

http://ai.googleblog.com/2019/10/introducing-schema-guided-dialogue.html

Top-conference research and the global expansion of Google Research

According to Google's official statistics, Googlers published 754 papers in the past year.

Jeff Dean also listed the paper counts at some top conferences:

More than 40 papers at CVPR, more than 100 at ICML, more than 60 at ICLR, more than 40 at ACL, more than 40 at ICCV, more than 120 at NeurIPS, and so on.

Google also hosted 15 separate workshops on topics ranging from improving global flood warnings, to using machine learning to build systems that better serve people with disabilities, to accelerating the development of algorithms, applications and tools for noisy intermediate-scale quantum (NISQ) processors.

Through its annual PhD fellowship program, Google funded more than 50 doctoral students around the world, and it also provided support to start-up companies.

Likewise, Google's research locations continued to expand globally in 2019, with a new research office opening in Bangalore. Jeff Dean also put out a recruiting call: if you are interested, come and join.

Artificial Intelligence Ethics

As in previous years, Jeff opened the report by discussing Google's work on artificial intelligence ethics.

This is also Google's clear statement of its position on AI practices, ethics, and technology for good.

In 2018, Google published seven AI principles and began applying them in practice. In June 2019, Google published a progress report showing how it puts these principles into practice in research and product development.

Report link:

https://www.blog.google/technology/ai/responsible-ai-principles/

Jeff Dean said that these principles cover many of the most active areas of artificial intelligence and machine learning research, such as bias, security, fairness, reliability, transparency and privacy in machine learning systems. Google's goal is therefore to apply techniques from these areas to its own work while continuing research to advance them.

On the research side, Google published a number of papers at academic conferences such as KDD '19 and AIES '19 on the fairness and interpretability of machine learning models.

One example is its research on how Activation Atlases can help explore the behavior of neural networks and improve the interpretability of machine learning models.

Related Links:

Exploring Neural Networks with Activation Atlases

https://ai.googleblog.com/2019/03/exploring-neural-networks.html

On the practical side, Google's efforts have also turned into real products.

For example, TensorFlow Privacy was released to help train machine learning models with privacy guarantees.

Related Links:

Introducing TensorFlow Privacy: Learning with Differential Privacy for Training Data

https://blog.tensorflow.org/2019/03/introducing-tensorflow-privacy-learning.html
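
To give a feel for what "learning with differential privacy" means in training, here is a minimal pure-Python sketch of the core step of differentially private SGD: clip each example's gradient to a maximum L2 norm, sum the clipped gradients, add Gaussian noise, then average. This is an illustration only and not TensorFlow Privacy's API; the function names `clip_gradient` and `dp_average_gradient` and the parameters `max_norm` and `noise_multiplier` are hypothetical names chosen for this sketch.

```python
import math
import random


def clip_gradient(grad, max_norm):
    """Return a copy of grad rescaled so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= max_norm or norm == 0.0:
        return list(grad)
    scale = max_norm / norm
    return [g * scale for g in grad]


def dp_average_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip per-example gradients, sum them, add Gaussian noise, and average.

    Assumption for this sketch: the noise standard deviation is
    noise_multiplier * max_norm, applied to the summed gradient.
    """
    n = len(per_example_grads)
    dim = len(per_example_grads[0])
    clipped = [clip_gradient(g, max_norm) for g in per_example_grads]
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    stddev = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, stddev) for s in summed]
    return [x / n for x in noisy]


if __name__ == "__main__":
    rng = random.Random(0)
    grads = [[3.0, 4.0], [0.3, 0.4]]  # toy per-example gradients
    print(dp_average_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Clipping bounds how much any single training example can move the model, and the noise hides what remains of that influence; a larger `noise_multiplier` gives stronger privacy at some cost in accuracy.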

Additionally, Google has released a new dataset to aid research in identifying deepfakes.

Related Links:

Contributing Data to Deepfake Detection Research

https://ai.googleblog.com/2019/09/contributing-data-to-deepfake-detection.html

Looking ahead to 2020 and beyond

Finally, drawing on the progress of the past 10 years, Jeff looked ahead to research trends in 2020 and beyond.

He said that in the past decade, the fields of machine learning and computer science have made remarkable progress, and we now have computers more capable than ever before of seeing, hearing and understanding language.

With sophisticated computing devices in our pockets, these capabilities can be harnessed to better assist us with many tasks in our daily lives.

Google has redesigned its computing platforms around these machine learning methods by developing specialized hardware that makes it possible to tackle larger problems.

This has changed how people think about computing devices in data centers, and the deep learning revolution will continue to reshape how we think about computing and computers.

At the same time, he pointed out that many questions remain unanswered. These are also Google's research directions for 2020 and beyond:

First, how to build a machine learning system that can handle millions of tasks and successfully complete new tasks automatically?

Second, how can we advance the state of the art in important areas of AI research, such as avoiding bias, improving explainability and understandability, improving privacy, and ensuring security?

Third, how can computing and machine learning be applied to make progress in important new areas of science, such as climate science, healthcare, bioinformatics and many other fields?

Fourth, how can we ensure that the ideas and directions pursued by the machine learning and computer science research communities are proposed and explored by a more diverse set of researchers? How can we best support new researchers from diverse backgrounds entering the field?

Finally, what do you think of Google AI's breakthroughs and progress over the past year?

Feel free to share your thoughts in the comments.

Link to the report:

https://ai.googleblog.com/2020/01/google-research-looking-back-at-2019.html

Link to Google's 2019 papers:

https://research.google/pubs/?year=2019

The author is a signed author of NetEase News·NetEase account "Everyone has their own attitude"